Introduction to Cluster Usage

Luis Pedro Coelho

On Twitter: @luispedrocoelho

What is a Cluster?

- A cluster is just a collection of machines that are networked together.

- They often share the same filesystem (which is a network file system).

- We will focus on the EMBL cluster system, but most academic clusters are similar.

- (Commercial companies increasingly use cloud computing.)

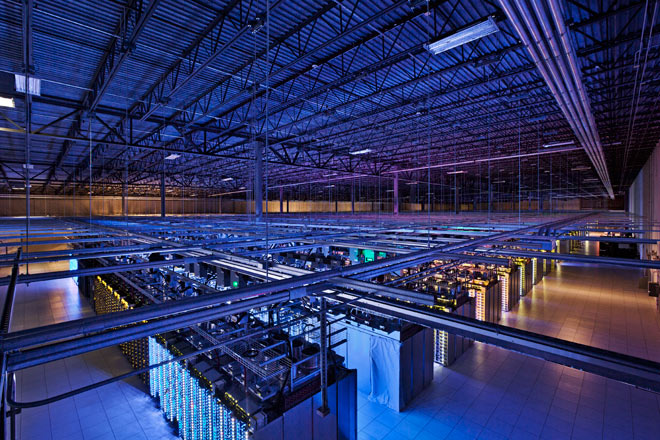

A server room in Council Bluffs, Iowa. (Photo: Google/Connie Zhou) from Wired

Queueing systems

- One head node, many compute nodes.

- Log in to head, submit scripts to queue.

- Queueing system will run your script on a free compute node.

Queueing systems are also called batch systems.

EMBL uses LSF

- LSF Documentation is at http://intranet.embl.de/wiki/LSF/Main_Page

- Grid Engine (GE or SGE) is also very popular. Most of the concepts are the same.

Unfortunately, many small details change between setups.

First Step: Let's all SSH to the head node

ssh name@submaster

You need to be inside the EMBL network for this to work (otherwise, you need to set up the VPN).

Using an interactive session

- Create a file in your home directory:

echo "Hello World" > file.txt

- Allocate a node for computation:

bsub -Is /bin/bash

We now depend on the cluster being free(ish).

- Verify that your file is there. Create a new one.

- Exit and verify that your new file is also there.

Running our first job on the queue

(1) Create a file called script.sh with the following content:

#!/bin/bash
echo $HOSTNAME
echo "My job ran"

(2) Make it executable:

chmod +x script.sh

(3) Submit it:

bsub ./script.sh

Checking up on your jobs

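bjobs

Lists your jobs and their current state (pending, running, ...)

bqueues

Shows the queues and how busy they are

bkill

Kills one of your jobs (bkill followed by the job ID that bjobs reports)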

Output goes to email!

Perhaps not the best default, but you can change it:

bsub -o output.txt ./script.sh

Do not compute on the head node

- The head node is shared by everybody.

- Any heavy computation will slow down everybody's work!

- File editing is OK.

- Small file moving is OK (but if it takes longer than a second, then write a script!).

- In case of doubt, submit it to the queue.

Do not compute on an unreserved compute node! That's even worse.

Use the build node to build software

There is a special node in the cluster, build000, which should be used to compile software.

ssh build000

Test your jobs before submitting!

This still happens to me:

- Submit a job

- Because the cluster is busy, it sits in the queue for an hour

- Then it promptly croaks because of a silly typo!

A few ways to check

- Run on a small test file.

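- set -n (or bash -n script.sh): the shell reads the script and reports syntax errors without executing anything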

- echo ...
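The last two checks can be tried without touching the queue. This is a minimal sketch, assuming a throwaway script called check-me.sh (a hypothetical name) that contains a deliberate typo. `bash -n` only parses the script, and putting `echo` in front of a command prints what would be run instead of running it.

```shell
#!/bin/bash
# Work in a scratch directory so nothing in your home directory is touched.
workdir=$(mktemp -d)
cd "$workdir"

# check-me.sh is a hypothetical script with a deliberate typo:
# the loop is never closed with "done".
cat > check-me.sh <<'EOF'
#!/bin/bash
for f in data/*; do
    grep -c mouse "$f"
EOF

# bash -n parses the script but executes nothing.
if bash -n check-me.sh 2>/dev/null; then
    syntax_check="ok"
else
    syntax_check="syntax error"
fi
echo "check-me.sh: $syntax_check"

# Dry run: echo prints the submission command instead of running it.
dry_run=$(echo bsub -o output.txt ./check-me.sh)
echo "$dry_run"
```

A syntax check catches only syntax errors. A run on a small test file is still the best way to catch a wrong path or a wrong argument.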

Advanced Cluster Usage

- Job arrays

- Allocating resources

- Job dependencies

Job Arrays

- A job array is a way to take advantage of many machines with the same script.

- Clusters are ideal for embarrassingly parallel problems, which characterize many settings in biology:

- Applying the same analysis to all images in a screen.

- BLASTing a large set of genes against the same database

- Parsing all abstracts in PubMed Central

- ...

For small things, just run separate processes

#!/bin/bash
input=$1
grep -c mouse "$input"

And now run it many times, using a loop in the shell:

for f in data/*; do

    bsub -o output.txt ./script.sh "$f"

done
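A possible variation on this loop gives every job its own output file, so results from different inputs do not end up mixed in one place. The sketch below is a dry run: it creates three hypothetical input files in a scratch directory and only prints the `bsub` commands. The function name `submit_all` and the file names are assumptions made for illustration.

```shell
#!/bin/bash
# Scratch directory with three hypothetical input files.
workdir=$(mktemp -d)
mkdir "$workdir/data"
touch "$workdir/data/a.txt" "$workdir/data/b.txt" "$workdir/data/c.txt"
cd "$workdir"

# Print one submission command per input file.
# Remove "echo" to submit for real.
submit_all() {
    for f in data/*; do
        echo bsub -o "$(basename "$f").out" ./script.sh "$f"
    done
}

submit_all
```

Quoting `"$f"` keeps file names with spaces in one piece.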

How do job arrays work

- Write a script.

- Submit it as a job array.

- The script is run multiple times with a different index

- Use the index to decide what to do!

Detour: environment variables

Have you talked about environment variables so far in the course?

Do you know what they are?

- Environment variables are named values that every process inherits from its parent; scripts can read and set them.

- Example: $HOSTNAME

LSF uses variables to communicate with your script

- LSB_JOBINDEX

- This is the job index

- ...

- Check documentation
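As a rough sketch of how a script can use the index: LSF job arrays are submitted with a name and an index range, for example `bsub -J "count[1-10]" ./array-job.sh`, and each copy of the script then sees its own value of `LSB_JOBINDEX`. The job name `count`, the script name array-job.sh, and the `input-N.txt` naming scheme are all hypothetical; check the LSF documentation for the details of your setup.

```shell
#!/bin/bash
# Sketch of a job-array script (hypothetical name: array-job.sh).
# Submitted with something like
#     bsub -J "count[1-10]" ./array-job.sh
# it runs ten times, with LSB_JOBINDEX set to 1, 2, ..., 10.

# Map an array index to an input file name.
# The input-N.txt naming scheme is an assumption for illustration.
input_for_index() {
    echo "input-$1.txt"
}

# Outside LSF the variable is unset, so default to 1 for local testing.
index=${LSB_JOBINDEX:-1}
input=$(input_for_index "$index")
echo "Job index $index would process $input"
```

Defaulting the index when `LSB_JOBINDEX` is unset lets you test the script on a small input before submitting it.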

Exercise: write and submit a job for this process

- Input is a series of files named x00, x01, ..., x09

- Task is to run the same script on each and save results to output0, output1, ... output9

- In our case, the task is to count the number of occurrences of the word mouse

In particular,

- please copy the directory /home/coelho/bio-it-training/data/by-number to your home directory

- write a script which will execute the following for all inputs:

grep -c mouse $input > $input.out

- Actually, you can start with the script count.mouse.sh that is already there.

Rarely is the input organized in such a nice fashion

Here is a more realistic scenario (1)

- Your input is a huge single file.

- Use split to break it up.
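A small sketch of what this can look like, assuming GNU split (the `-d` option for numeric suffixes is not available in every implementation). A 25-line file with the hypothetical name big-input.txt stands in for the huge input.

```shell
#!/bin/bash
workdir=$(mktemp -d)
cd "$workdir"

# Stand-in for the huge input: a 25-line file (hypothetical name).
seq 1 25 > big-input.txt

# -l 10: ten lines per chunk
# -d:    numeric suffixes (GNU split)
# x:     prefix for the output files
split -l 10 -d big-input.txt x

# Lists the chunks: x00, x01 and x02.
ls x*
```

The chunks come out numbered (x00, x01, ...), which is convenient when each one is to be handled by a separate job.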

Here is a more realistic scenario (2)

- Your input is a list of files, but they have arbitrary names

- A few helpful shell commands:

- ls -1 > file-list.txt

- To get the fourth line of a file: sed -n "4p" file-list.txt

- please copy the directory /home/coelho/bio-it-training/data/unordered to your home directory and write a script to count the number of mice in each of the files. Again, a script count.mouse.sh is present if you need to start somewhere.
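The two helper commands can be combined so that a number selects a file. The following is only a sketch with made-up file names in a scratch directory; `nth_file` is a hypothetical helper, and the index falls back to 2 when the script is not running under LSF.

```shell
#!/bin/bash
workdir=$(mktemp -d)
mkdir "$workdir/inputs"
cd "$workdir"

# Hypothetical inputs with arbitrary names.
touch inputs/liver.txt inputs/brain.txt inputs/kidney.txt

# One file name per line, in sorted order.
ls -1 inputs > file-list.txt

# Print the file name found on line N of file-list.txt.
nth_file() {
    sed -n "${1}p" file-list.txt
}

# Under LSF the index comes from LSB_JOBINDEX; default to 2 for local testing.
index=${LSB_JOBINDEX:-2}
echo "Index $index -> inputs/$(nth_file "$index")"
```

Building the list once, before submitting, means every job sees the same order even if files are added to the directory later.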

Fail well

Common Unix strategy:

- Write your output to file.tmp, preferably in the same directory as the final output

- Call sync (!)

- Move to the final location

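Unix guarantees that the move is atomic, as long as the temporary file and the final location are on the same filesystem (which is why the same directory is preferred). A job that dies halfway leaves a stray file.tmp behind, never a truncated final file.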

Rewrite the mouse count script to use the temp-move strategy
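One way the strategy could look in general, as a sketch rather than the solution to the exercise: `write_atomically` is a hypothetical helper that runs any command, collects its output in a temporary file next to the final one, and renames it only if the command succeeded.

```shell
#!/bin/bash
workdir=$(mktemp -d)
cd "$workdir"

# Run a command and write its output to FINAL only once it is complete.
# Usage: write_atomically FINAL COMMAND [ARGS...]
write_atomically() {
    local final=$1
    shift
    local tmp="$final.tmp"    # same directory as the final file
    if "$@" > "$tmp"; then
        sync                  # flush the data to disk before renaming
        mv "$tmp" "$final"    # the rename is the atomic step
    else
        rm -f "$tmp"          # failed: leave no partial result behind
        return 1
    fi
}

# A command that succeeds: numbers.txt appears, complete.
write_atomically numbers.txt seq 1 5

# A command that fails: broken.txt is never created.
write_atomically broken.txt false || echo "command failed, no broken.txt written"

ls
```

With this pattern, the existence of the final file is a reliable sign that the job finished, which makes it easy to find out which jobs need to be rerun.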

Remember to allocate resources

- CPUs (same machine or different machines)

- Memory

- GPUs (graphics processing units)

- Time

- Disk

- Software licenses

- Network usage

How can you check how much memory your process uses?

- Guesstimate

- Measure (look at top)
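Besides watching top, a measurement can also be scripted. This sketch assumes a Linux machine, where the kernel reports the peak resident memory of each process as the `VmHWM` line of `/proc/PID/status`; `peak_kb` is a hypothetical helper, and `sleep` stands in for a real computation.

```shell
#!/bin/bash
# Peak resident memory (in kB) of a running process, read from /proc (Linux only).
peak_kb() {
    awk '/^VmHWM:/ { print $2 }' "/proc/$1/status"
}

# Start a stand-in process in the background and measure it while it runs.
sleep 2 &
pid=$!
mem=$(peak_kb "$pid")
echo "Process $pid has used at most $mem kB of resident memory so far"

# Wait for the background process to finish.
wait "$pid"
```

A measurement on a small test input gives a starting point only; memory use often grows with the size of the input, so leave some margin when requesting resources.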

Job dependencies

- You can schedule a job after another job has finished.

- Common setting:

- Extract some information from a large set of inputs (parallel)

- Summarize this information (textual/plot/&c)

- In our case, we summarize the mouse counts.
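A sketch of both halves, under stated assumptions: the summary step adds up per-file counts (the three `.out` files here are made-up stand-ins), and the submission is shown as a dry run. In LSF, `-J` gives a job a name and `-w` with a condition such as `done(count)` makes a job wait until the named job has finished successfully. The script names count.sh and summarize.sh are hypothetical.

```shell
#!/bin/bash
workdir=$(mktemp -d)
cd "$workdir"

# Stand-ins for per-file results: each .out file holds one count.
echo 3 > x00.out
echo 0 > x01.out
echo 4 > x02.out

# Summary step: add up the counts in all the files given as arguments.
total_count() {
    awk '{ total += $1 } END { print total + 0 }' "$@"
}
total_count *.out

# Dry run of the two submissions (remove "echo" to submit for real).
# The second job starts only after the job named "count" is done.
echo bsub -J count ./count.sh
echo bsub -w "'done(count)'" ./summarize.sh
```

Testing the summary step by hand on a few result files first avoids waiting for the whole first stage only to find a typo in the second.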

Shameless plug for jug

If you use Python, you may want to look at my package jug, which can make running jobs on clusters easier.

(Only makes sense if you're using Python.)

If you get stuck

- Look at help, Stack Overflow, &c

- Ask somebody who knows

- Ask the help desk

- Ask me (coelho@embl.de)